Bootstrap Consensus Networks

a method of clustering

Abstract

The aim of this project (it started as an ad-hoc idea, and then turned into something bigger…) is to discuss reliability issues of a few visual techniques used in stylometry, and to introduce a new method that enhances the explanatory power of visualization with a procedure of validation inspired by advanced statistical methods. Since it is based on the idea of Bootstrap Consensus Trees (Eder, 2013), it has been named accordingly: Bootstrap Consensus Networks.

The following description is but an appetizer. For a detailed explanation of the method’s theoretical assumptions, features, and implementation, refer to the following paper:

Eder, M. (2017). Visualization in stylometry: cluster analysis using networks. Digital Scholarship in the Humanities, 32(1): 50–64, [pre-print].

Motivation

Most unsupervised (exploratory) methods used in stylometry, such as principal components analysis, multidimensional scaling or cluster analysis, lack an important feature: it is hard to assess the robustness of the estimated model. While supervised classification methods exploit the idea of cross-validation, unsupervised clustering techniques remain, well…, unsupervised. On the other hand, the results obtained using these techniques “speak for themselves”, which gives a practitioner an opportunity to notice with the naked eye any peculiarities or unexpected behavior in the analyzed corpus. Also, given a tree-like graphic representation of similarities between particular samples, one can easily interpret the results, e.g. by identifying the group of texts to which a disputed sample belongs.

However, one of the biggest problems with cluster analysis is that its results depend heavily on the number of analyzed features (e.g. most frequent words, or MFWs), the linkage algorithm, and the distance measure applied. Deciding which parameters reveal the actual separation of the samples and which show fake similarities is not trivial at all. Worse, generating hundreds of dendrograms covering the whole spectrum of MFWs, a variety of linkage algorithms, and a number of distance measures would make this choice even more difficult. At this point, a stylometrist inescapably faces the well-known cherry-picking problem (Rudman, 2003): when it comes to choosing the plot that is most likely to be “true”, scholars are in danger of more or less unconsciously picking one that looks more reliable than others, or simply one that confirms their hypotheses.

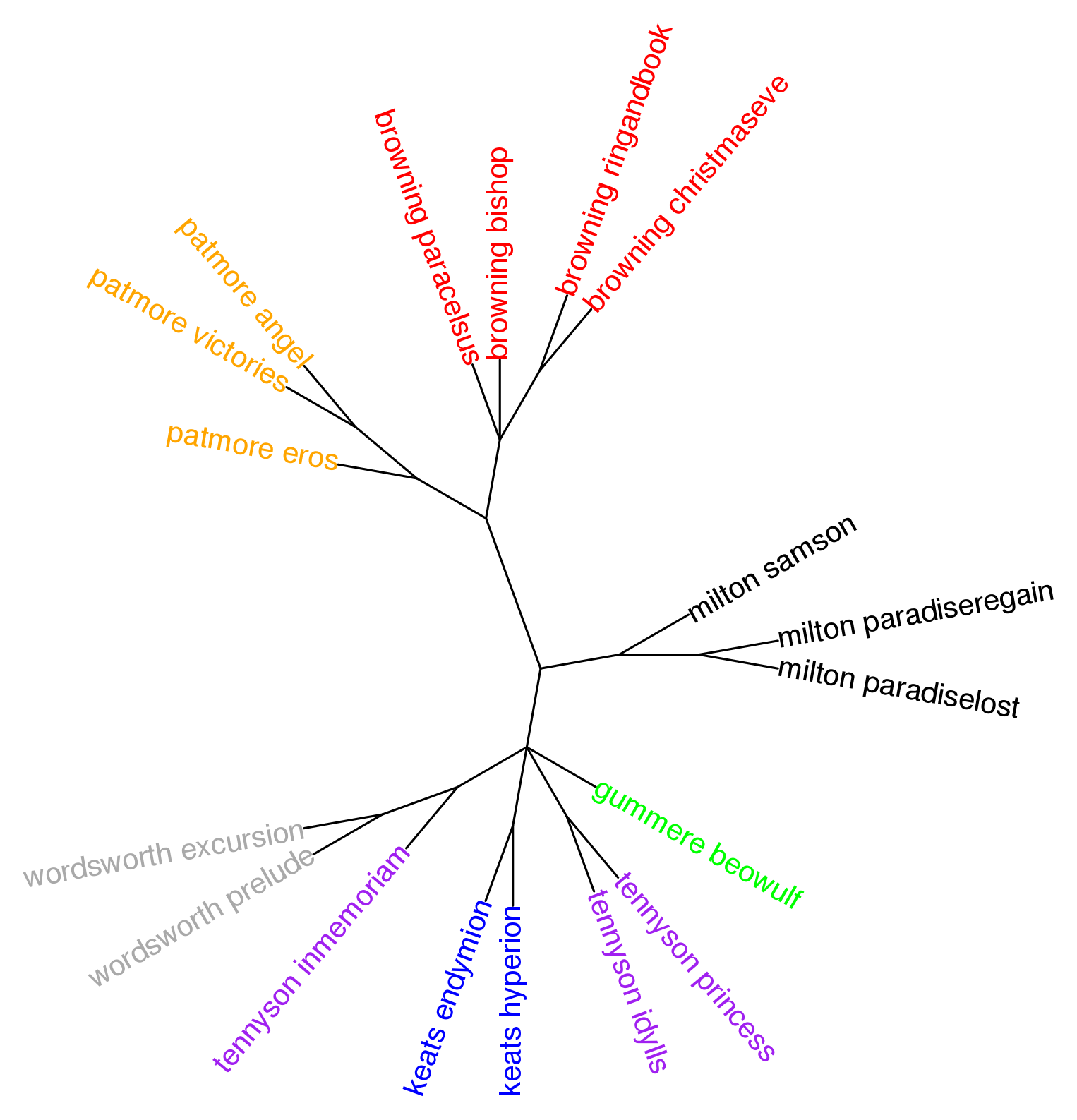

Bootstrap consensus trees

A partial solution to the cherry-picking problem involves combining the information revealed by numerous dendrograms into a single consensus plot. This technique was developed in phylogenetics (Paradis et al., 2004) and later used to assess differences between Papuan languages (Dunn et al., 2005). It has also been introduced to stylometry (Eder, 2013) and applied in a number of stylometric studies (many of them mentioned elsewhere on this website). The approach assumes that, across a large number of “snapshots” (e.g. for 100, 200, 300, 400, …, 1,000 MFWs), actual groupings tend to reappear, while accidental similarities occur only in a few graphs. The goal, then, is to capture the patterns that remain robust across the set of generated snapshots. The procedure produces a number of virtual dendrograms and then evaluates the robustness of groupings across these dendrograms (Fig. 2).
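
To make the consensus step more concrete, here is a minimal Python sketch of majority-rule clade counting, one common way of building a consensus tree. It assumes that the snapshot dendrograms have already been computed and are encoded as nested tuples of text labels; the tuple encoding, the function names, the toy snapshots and the 0.5 cut-off are illustrative assumptions, not the exact implementation used in Eder (2013). A clade (a group of texts hanging from one node) is kept only if it appears in more than half of the snapshots.

```python
from collections import Counter
from typing import Dict, FrozenSet, List, Set, Union

# A dendrogram encoded as nested tuples of text labels, e.g. (("A", "B"), "C").
Tree = Union[str, tuple]


def collect_clades(tree: Tree, found: Set[FrozenSet[str]]) -> FrozenSet[str]:
    """Add every internal clade (the set of leaves below a node) to `found`."""
    if isinstance(tree, str):
        return frozenset([tree])
    leaves = frozenset().union(*(collect_clades(child, found) for child in tree))
    found.add(leaves)
    return leaves


def consensus_clades(trees: List[Tree], strength: float = 0.5) -> Dict[FrozenSet[str], float]:
    """Keep clades that occur in more than `strength` of the trees, with their support."""
    counts: Counter = Counter()
    for tree in trees:
        found: Set[FrozenSet[str]] = set()
        collect_clades(tree, found)
        counts.update(found)  # each clade counted at most once per tree
    n = len(trees)
    return {clade: k / n for clade, k in counts.items() if k / n > strength}


# Hypothetical snapshots, e.g. dendrograms obtained for 100, 200 and 300 MFWs.
snapshots = [
    ((("A1", "A2"), "B1"), ("C1", "C2")),
    ((("A1", "A2"), ("B1", "C1")), "C2"),
    (("A1", "A2"), (("B1", "C1"), "C2")),
]
support = consensus_clades(snapshots)
for clade, share in sorted(support.items(), key=lambda item: (len(item[0]), sorted(item[0]))):
    print(sorted(clade), round(share, 2))
```

Here the pair A1/A2 survives in every snapshot, B1/C1 in two out of three, while groupings such as C1/C2 appear only once and are dropped; the clade containing all texts is trivially present in every tree.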

Bootstrap consensus networks

Although the problem of unstable results can be partially bypassed using consensus techniques, two other issues remain unresolved. Firstly, when the number of analyzed texts exceeds a few dozen, the consensus tree becomes considerably cluttered. Secondly, the procedure of hammering out the consensus identifies nearest neighbors only, which means extracting the strongest patterns (usually, the authorial signal) and filtering out weaker textual similarities. To overcome these two issues, two tailored algorithms have been introduced.

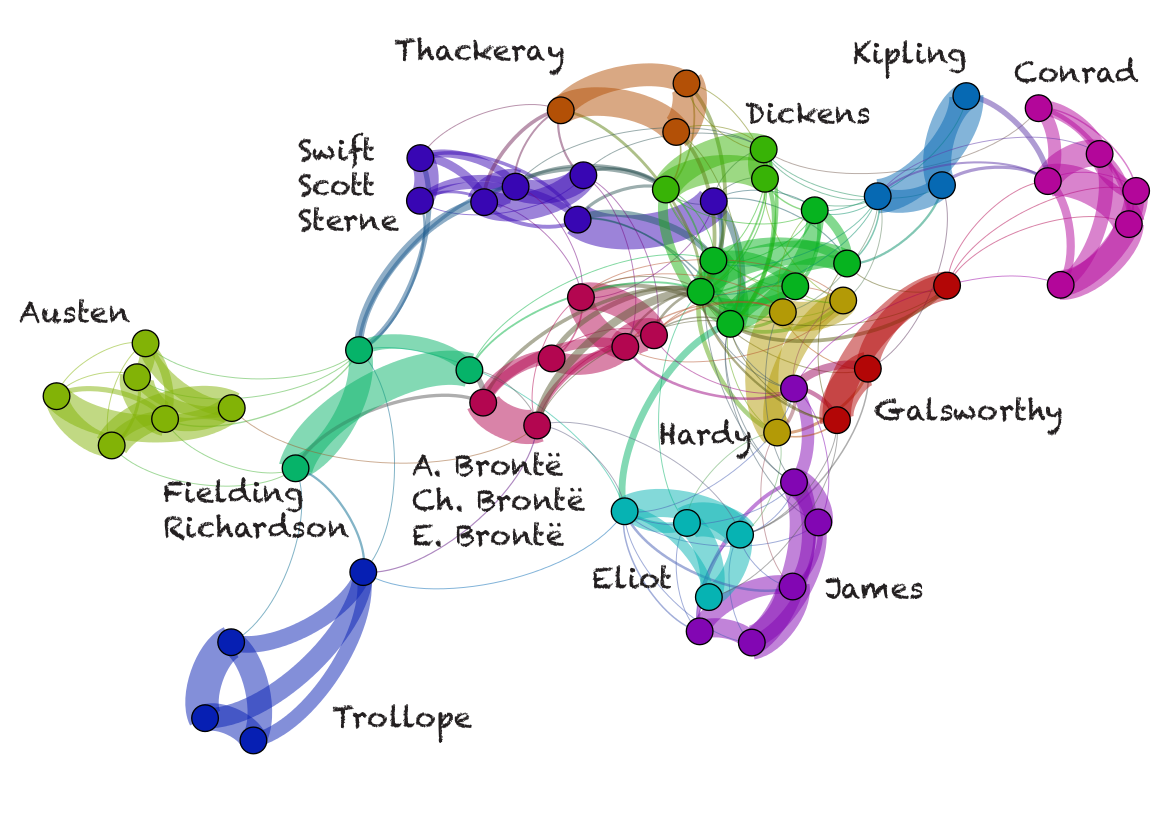

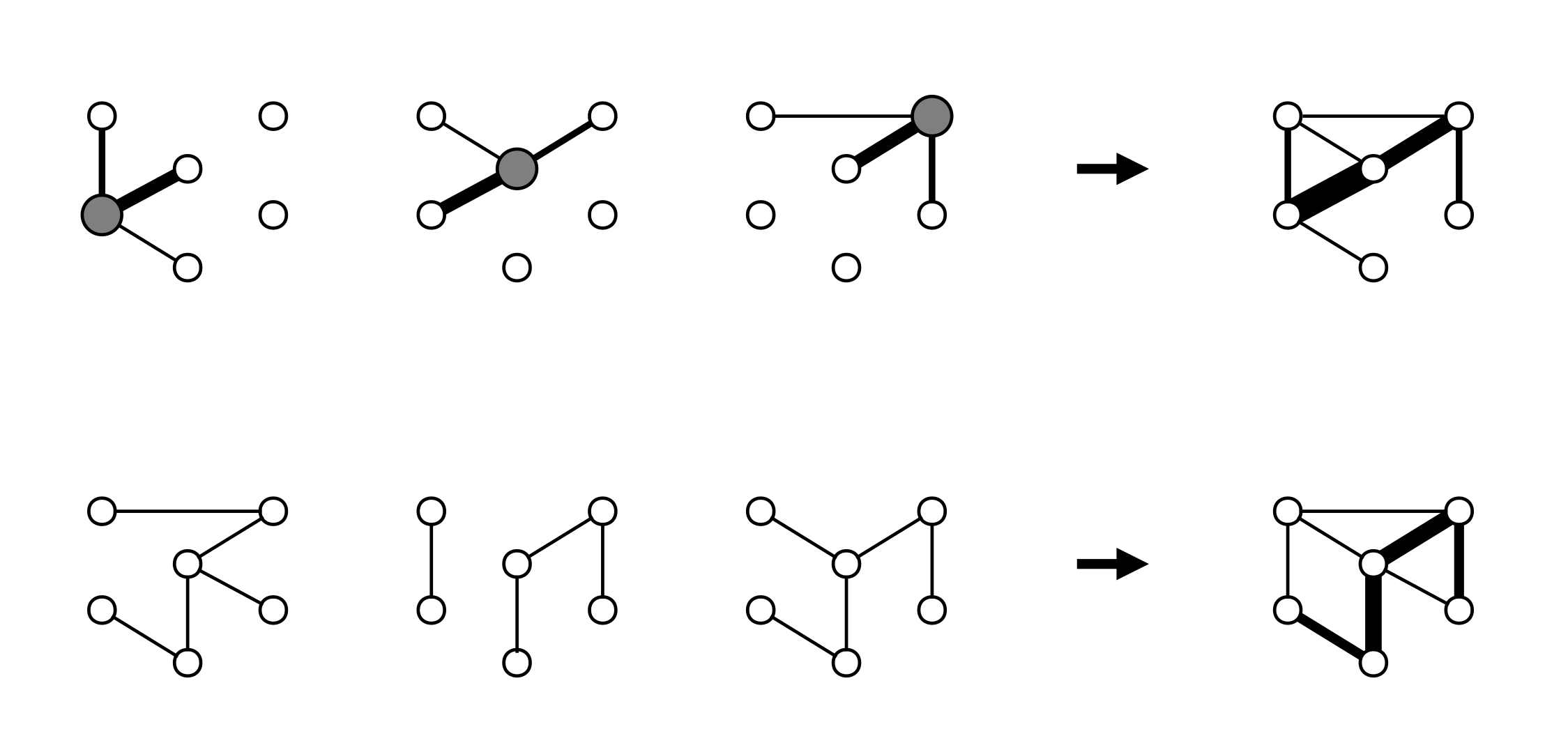

The first algorithm establishes, for every single node, a strong connection to its nearest neighbor (i.e. the most similar text) and two weaker connections to the 1st and the 2nd runner-up (Fig. 3, top). Consequently, the final network contains a number of weighted links, some of them thicker (close similarities), others revealing weaker connections between samples. The second algorithm (Fig. 3, bottom) is aimed at overcoming the problem of unstable results: it carries the idea of consensus dendrograms, as discussed above, over into network analysis. The goal is to perform a large number of tests for similarity with different numbers of features analyzed (e.g. 100, 200, 300, …, 1,000 MFWs). Finally, all the connections produced in the particular “snapshots” are added up, resulting in a consensus network. The weights of these final connections tend to differ significantly: the strongest ones indicate robust nearest neighbors, while weak links stand for secondary and/or accidental similarities. Validation of the results, or rather self-validation, is provided by the fact that a consensus of many single approaches to the same corpus reinforces robust textual similarities and filters out accidental clusterings.
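
The two algorithms can be sketched together in a few lines of Python. This is a minimal illustration built on several assumptions of our own: texts are given as lists of tokens, similarity is measured with Burrows’s Delta (the mean absolute difference of z-scored relative frequencies of the most frequent words), and the nearest neighbor and the two runners-up receive the hypothetical weights 1, 0.5 and 0.25. Links are treated as undirected, so mutual nearest neighbors collect weight from both sides. The function names and the toy corpus are hypothetical.

```python
from collections import Counter, defaultdict
from statistics import mean, pstdev
from typing import Dict, Iterable, List, Tuple

Edge = Tuple[str, str]
# Hypothetical link weights: nearest neighbor, 1st runner-up, 2nd runner-up.
WEIGHTS = (1.0, 0.5, 0.25)


def relative_freqs(texts: Dict[str, List[str]]) -> Dict[str, Dict[str, float]]:
    """Relative frequency of every word type in every text."""
    return {name: {w: c / len(tokens) for w, c in Counter(tokens).items()}
            for name, tokens in texts.items()}


def delta_distances(freqs: Dict[str, Dict[str, float]], mfw: int) -> Dict[Edge, float]:
    """Burrows's Delta between all ordered pairs of texts, using the `mfw` most frequent words."""
    corpus: Counter = Counter()
    for f in freqs.values():
        corpus.update(f)  # rank words by their summed relative frequency
    words = [w for w, _ in corpus.most_common(mfw)]
    names = list(freqs)
    z: Dict[str, List[float]] = {n: [] for n in names}
    for w in words:
        column = [freqs[n].get(w, 0.0) for n in names]
        mu, sd = mean(column), pstdev(column)
        for n, x in zip(names, column):
            z[n].append((x - mu) / sd if sd > 0 else 0.0)
    return {(a, b): mean(abs(p - q) for p, q in zip(z[a], z[b]))
            for a in names for b in names if a != b}


def snapshot_edges(dist: Dict[Edge, float], names: List[str]) -> Dict[Edge, float]:
    """First algorithm: link each text to its 3 nearest neighbors with decreasing weights."""
    edges: Dict[Edge, float] = defaultdict(float)
    for a in names:
        ranked = sorted((b for b in names if b != a), key=lambda b: dist[(a, b)])
        for weight, b in zip(WEIGHTS, ranked):
            edges[tuple(sorted((a, b)))] += weight  # undirected link
    return edges


def consensus_network(texts: Dict[str, List[str]], mfw_range: Iterable[int]) -> Dict[Edge, float]:
    """Second algorithm: add up the links produced by every MFW snapshot."""
    freqs = relative_freqs(texts)
    names = list(texts)
    total: Dict[Edge, float] = defaultdict(float)
    for mfw in mfw_range:
        for edge, weight in snapshot_edges(delta_distances(freqs, mfw), names).items():
            total[edge] += weight
    return dict(total)


# Toy corpus (hypothetical): A1/A2 and B1/B2 are identical pairs, C stands alone.
texts = {
    "A1": "the the the and of a".split(),
    "A2": "the the the and of a".split(),
    "B1": "the and and and of a".split(),
    "B2": "the and and and of a".split(),
    "C": "the and of of of a".split(),
}
net = consensus_network(texts, range(2, 5))  # snapshots for 2, 3 and 4 MFWs
for edge, weight in sorted(net.items(), key=lambda item: -item[1]):
    print(edge, weight)
```

In the toy corpus the identical pairs are always each other’s nearest neighbors, so their links collect the full weight in every snapshot, while the links attached to C stay weaker. In a real corpus one would use hundreds of MFWs per snapshot and possibly a different distance measure.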

What’s the added value here? Why include not only the first-ranked candidate (i.e. the nearest neighbor), but also two runners-up? Why bother? Frankly, the idea might indeed seem a bit odd. However, this is exactly why the method proves so powerful. While it is not surprising to see Tristram Shandy by Sterne linked to A Sentimental Journey by the same author, it is much more interesting to learn that the next most similar authors are Swift and Scott. Such relations can be represented as a network of mutual similarities, where some of the connections are very strong and others remain ethereal. More specifically, the algorithms used in Bootstrap Consensus Networks are, in a way, equivalent to a k-NN classifier with k = 3.

Establishing the links between the texts is undoubtedly the most important part of the procedure. However, the final step is to arrange the nodes on a plane in such a way that they reveal as much information about linkage as possible. There are a number of out-of-the-box layout algorithms reported in the literature. The members of the Computational Stylistics Group, however, usually choose one of the force-directed layouts: ForceAtlas2, embedded in Gephi, an open-source tool for network manipulation and visualization (Bastian et al., 2009).
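
Since the layout itself is done in Gephi, the consensus network only needs to be exported as a weighted edge list. A minimal sketch, assuming the Source/Target/Weight/Type column headers that Gephi’s spreadsheet (CSV) import recognizes for edge tables; it is worth checking them against your Gephi version. The function name and the toy edges are hypothetical.

```python
import csv
import io
from typing import Dict, TextIO, Tuple


def write_gephi_edges(edges: Dict[Tuple[str, str], float], out: TextIO) -> None:
    """Write an undirected weighted edge list with Gephi-style column headers."""
    writer = csv.writer(out)
    writer.writerow(["Source", "Target", "Weight", "Type"])
    for (source, target), weight in sorted(edges.items()):
        writer.writerow([source, target, weight, "Undirected"])


# Hypothetical consensus edges, e.g. the summed links of a consensus network.
edges = {("A1", "A2"): 6.0, ("A1", "C"): 1.5, ("B1", "B2"): 6.0}
buffer = io.StringIO()
write_gephi_edges(edges, buffer)
csv_text = buffer.getvalue()
print(csv_text)
```

Writing to a file opened with `newline=""` instead of the in-memory buffer gives a CSV that can be imported as an edges table and then laid out with ForceAtlas2.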

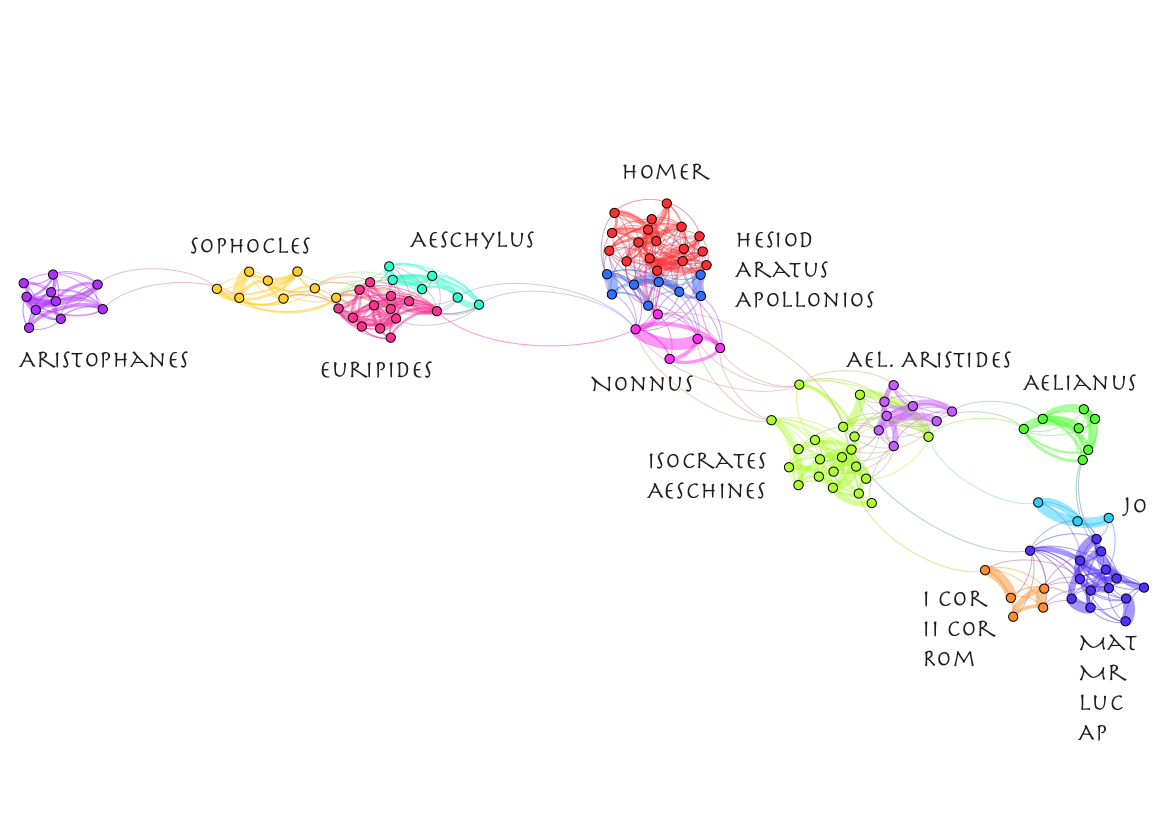

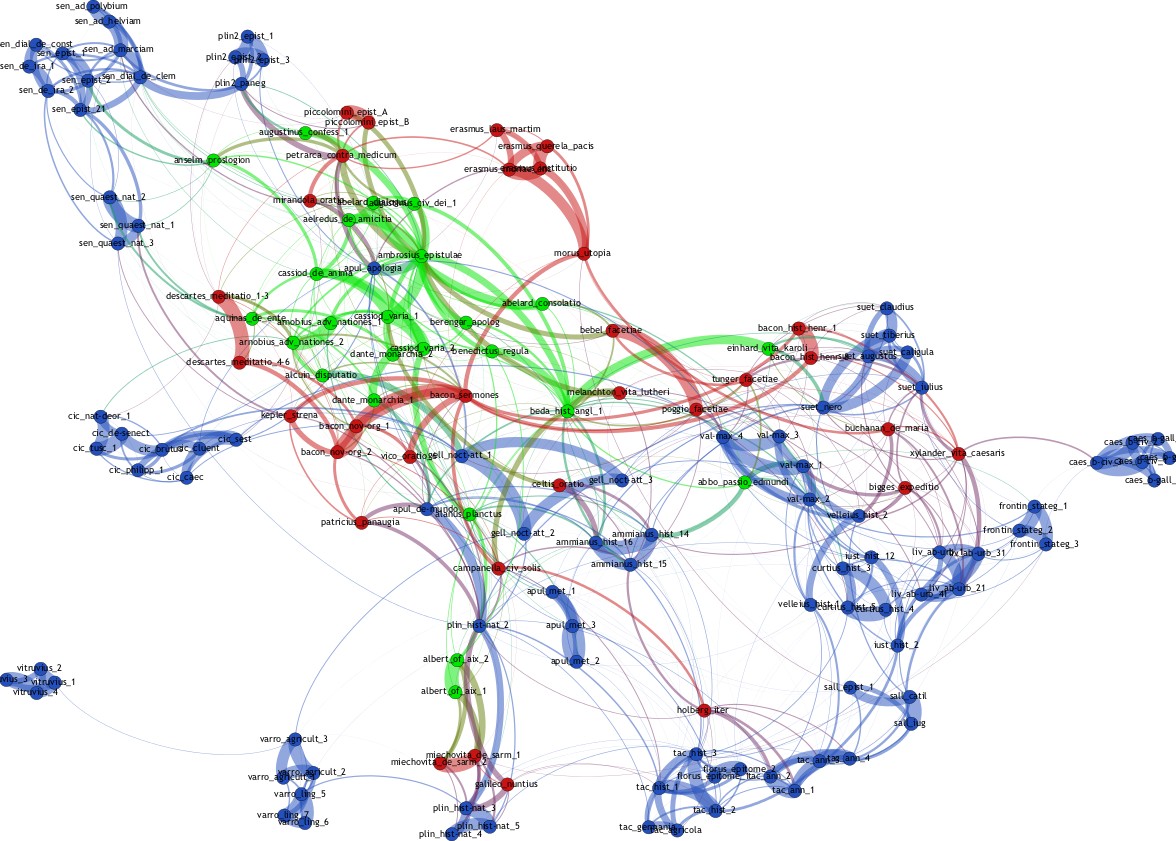

The networks are quite neat as they are, but they can be enhanced by adding more information. For example, one can run the modularity detection implemented in Gephi and color the nodes according to their modularity class. Fig. 4 shows a network of 124 Ancient Greek texts; the identified modularity classes group together texts written by stylistically similar authors.
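
Gephi’s modularity statistic searches for a partition of the nodes that maximizes Newman’s modularity Q (it uses the Louvain method for this search). The search itself is beyond a short example, but the quantity it optimizes is easy to compute: for every class, the share of the total edge weight that falls inside the class, minus the squared share of the total weighted degree held by that class. A minimal sketch for an undirected weighted network; the function name and the toy graph are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Tuple


def modularity(edges: Dict[Tuple[str, str], float], community: Dict[str, int]) -> float:
    """Newman's modularity Q of a node partition in an undirected weighted graph."""
    m = sum(edges.values())                         # total edge weight
    degree: Dict[str, float] = defaultdict(float)   # weighted degree of each node
    inside: Dict[int, float] = defaultdict(float)   # edge weight within each class
    for (a, b), w in edges.items():
        degree[a] += w
        degree[b] += w
        if community[a] == community[b]:
            inside[community[a]] += w
    class_degree: Dict[int, float] = defaultdict(float)
    for node, k in degree.items():
        class_degree[community[node]] += k
    # Observed share of weight inside each class minus the share expected under random wiring.
    return sum(inside[c] / m - (class_degree[c] / (2 * m)) ** 2 for c in class_degree)


# Toy graph (hypothetical): two triangles joined by a single link.
edges = {("a", "b"): 1.0, ("b", "c"): 1.0, ("a", "c"): 1.0,
         ("d", "e"): 1.0, ("e", "f"): 1.0, ("d", "f"): 1.0, ("c", "d"): 1.0}
two_classes = {"a": 0, "b": 0, "c": 0, "d": 1, "e": 1, "f": 1}
one_class = dict.fromkeys(two_classes, 0)
print(modularity(edges, two_classes), modularity(edges, one_class))
```

Splitting the graph into its two triangles scores higher than lumping all nodes into one class, which scores zero; a modularity-based search prefers the former, and the resulting class labels are what the node colors encode.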

Applications

Bootstrap consensus networks prove very attractive in “distant reading” approaches to literature, or in experiments that try to capture a broader picture of literary periods, genres, and so forth. Below (Fig. 5), a large-ish corpus of 150 Latin texts is shown, where the colors represent three different periods of Latin literature. As you can see, there is some separation between ancient, medieval, and early modern texts, although it is not clear-cut. This example comes from a study on distant reading and style variation in Latin (Eder, 2016).

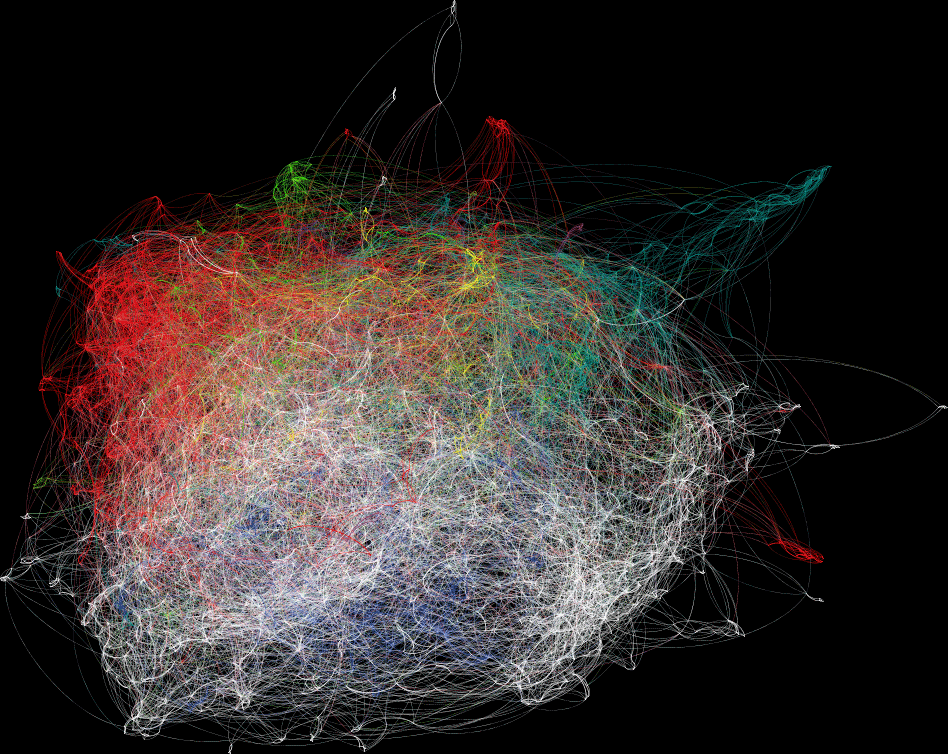

What about a really large-scale experiment? Here’s a network of 2,000 novels, produced by Jan Rybicki (Fig. 6). It consists of 1,000 novels written originally in Polish, and 1,000 translations into Polish from a few other languages, including English, Russian, and French. Refer to this post for more details!

The method has been quite extensively applied by the members of the Computational Stylistics Group. Some of the references can be found at the bottom of this post; the vast majority of the applications are listed in the publications section of this website.

References

Bastian, M., Heymann, S. and Jacomy, M. (2009). Gephi: an open source software for exploring and manipulating networks. In: International AAAI Conference on Weblogs and Social Media, http://www.aaai.org/ocs/index.php/ICWSM/09/paper/view/154.

Dunn, M., Terrill, A., Reesink, G., Foley, R. and Levinson, S. (2005). Structural phylogenetics and the reconstruction of ancient language history. Science, 309: 2072–75.

Eder, M. (2013). Computational stylistics and Biblical translation: how reliable can a dendrogram be? In: T. Piotrowski and Ł. Grabowski (eds.) The translator and the computer. Wrocław: WSF Press, pp. 155–170, [pre-print].

Eder, M. (2014a). Stylometry, network analysis and Latin literature. In: Digital Humanities 2014: Book of Abstracts, EPFL-UNIL, Lausanne, pp. 457–58, http://dharchive.org/paper/DH2014/Poster-324.xml, [the poster itself].

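Eder, M. (2014b). Metody ścisłe w literaturoznawstwie i pułapki pozornego obiektywizmu – przykład stylometrii [Exact methods in literary studies and the pitfalls of apparent objectivity: the case of stylometry]. Teksty Drugie, 2: 90–105.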

Eder, M. (2016). A bird’s eye view of early modern Latin: distant reading, network analysis and style variation. In: M. Ullyot, D. Jakacki, and L. Estill (eds.), Early Modern Studies and the Digital Turn. Toronto and Tempe: Iter and ACMRS, pp. 63–89 (New Technologies in Medieval and Renaissance Studies, vol. 6), http://ems.itercommunity.org, [pre-print].

Eder, M. (2017). Visualization in stylometry: cluster analysis using networks. Digital Scholarship in the Humanities, 32(1): 50–64, [pre-print].

Paradis, E., Claude, J. and Strimmer, K. (2004). APE: analyses of phylogenetics and evolution in R language. Bioinformatics, 20: 289–90.

Rudman, J. (2003). Cherry picking in nontraditional authorship attribution studies. Chance, 16: 26–32.

Rybicki, J. (2016). Vive la différence: Tracing the (authorial) gender signal by multivariate analysis of word frequencies. Digital Scholarship in the Humanities, 31(4): 746–61, [pre-print].

Rybicki, J., Eder, M. and Hoover, D. (2016). Computational stylistics and text analysis. In: C. Crompton, R. L. Lane and R. Siemens (eds.), Doing Digital Humanities, London and New York: Routledge, pp. 123–144.