A simple idea…

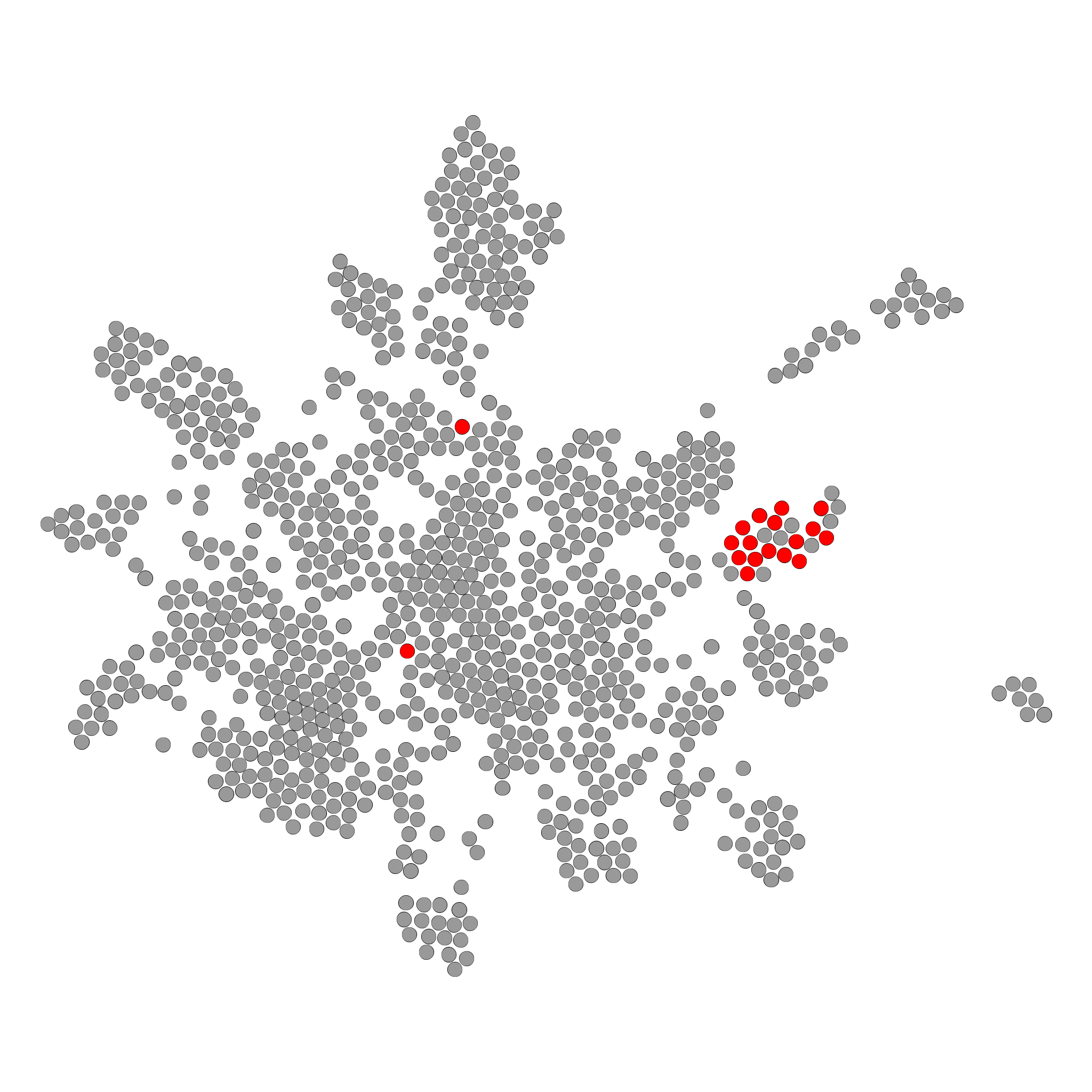

I have just returned from a visit to my landlord – the solitary neighbour that I shall be troubled with.

neighbour solitary troubled landlord visit returned just shall from I have be with my that a to the
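The reordering above can be read as a bag-of-words view of the sentence: word order is discarded and only the words themselves (and how often they occur) are kept. A minimal sketch of that step, assuming a very simple tokenizer (lowercasing and keeping alphabetic runs only), which is an illustrative choice rather than the method used for the slide:

```python
import re
from collections import Counter

sentence = (
    "I have just returned from a visit to my landlord – "
    "the solitary neighbour that I shall be troubled with."
)

# Assumption: lowercase everything and treat each run of letters as one token.
tokens = re.findall(r"[a-z]+", sentence.lower())

# The bag of words: order is gone, only per-word counts remain.
bag = Counter(tokens)

print(len(tokens))          # number of running words (tokens)
print(len(bag))             # number of distinct words (types)
print(bag.most_common(1))   # the only word that occurs twice
```

The sentence has 19 tokens but 18 distinct words, because "I" occurs twice; that is why the shuffled list above contains 18 items.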

Introduction to Distributional Semantics (1): Topic Modeling

Maciej Eder

Institute of Polish Language (Polish Academy of Sciences)

IQLA-GIAT Summer School, Padova, 27.07.2021

The meaning of words lies in their use.

(Wittgenstein 1953: 80, 109)

You shall know a word by the company it keeps.

(Firth 1962: 11)

heathcliff, linton, catherine, hareton, earnshaw, cathy, edgar, ellen, heights, hindley, nelly, ll, grange, i, wuthering, t, joseph, isabella, master, gimmerton, zillah, m, exclaimed, he, thrushcross, and, answered, yah, kenneth, ve, maister, lockwood, kitchen, you, dean, moors, replied, cried, him, muttered, lintons, papa, she, till, commenced, on, wer, ech, shoo, leant, hearth, bonny, door, stairs, hell, me, crags, moor, wouldn, fiend, settle, jabez, penistone, fire, ye, its, bid, nowt, naught, yer, hush, mistress, grew, lad, compelled, minny, won, hisseln, skulker, soa, wisht, cousin, lattice, didn, yon, minute, lass, needn, inquired, snow, branderham, flaysome, gooid, sud, thear, affirming, interrupted, couldn, window, …

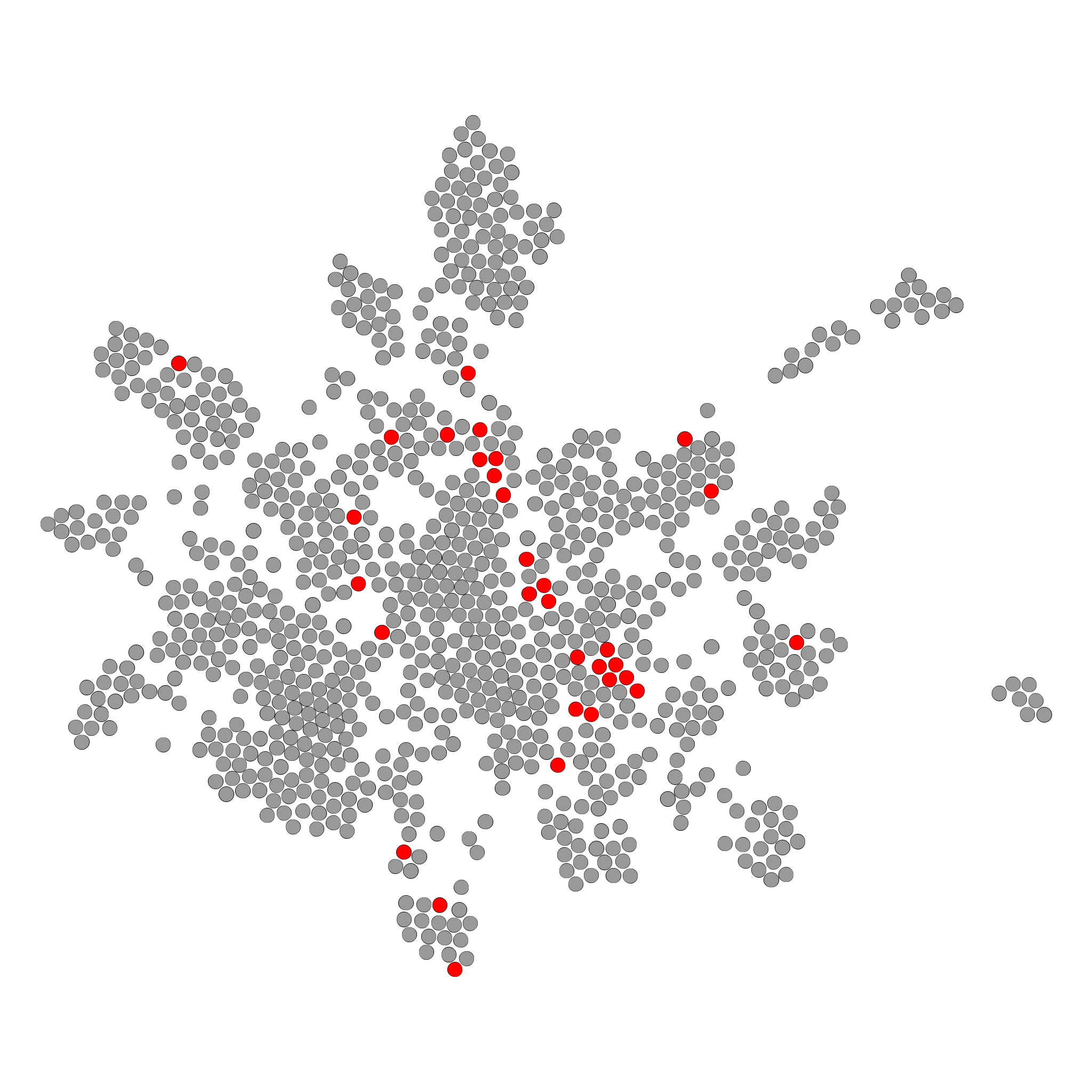

An example: \[ P(A) = 0.001 \quad\quad P(B) = 0.002 \quad\quad P(A) \times P(B) = 0.000002 \]
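The product above is what one would expect for the joint probability of A and B if the two were independent. The sketch below reproduces that arithmetic exactly and compares it with an observed joint probability. The slide does not say what A and B stand for, so reading them as two words is an assumption, and the observed value `p_ab_observed` is a made-up number used only for illustration.

```python
from fractions import Fraction

# Probabilities taken from the example above.
p_a = Fraction(1, 1000)   # P(A) = 0.001
p_b = Fraction(2, 1000)   # P(B) = 0.002

# Expected joint probability if A and B were independent.
p_ab_expected = p_a * p_b

# Hypothetical observed joint probability (not from the source).
p_ab_observed = Fraction(1, 10000)

# A ratio above 1 means A and B co-occur more often than independence predicts.
ratio = p_ab_observed / p_ab_expected

print(float(p_ab_expected))
print(float(ratio))
```

With these illustrative numbers, the pair would co-occur 50 times more often than chance alone predicts; a ratio near 1 would instead suggest no particular attraction between A and B.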

strong tea — *powerful tea

powerful computer — *strong computer

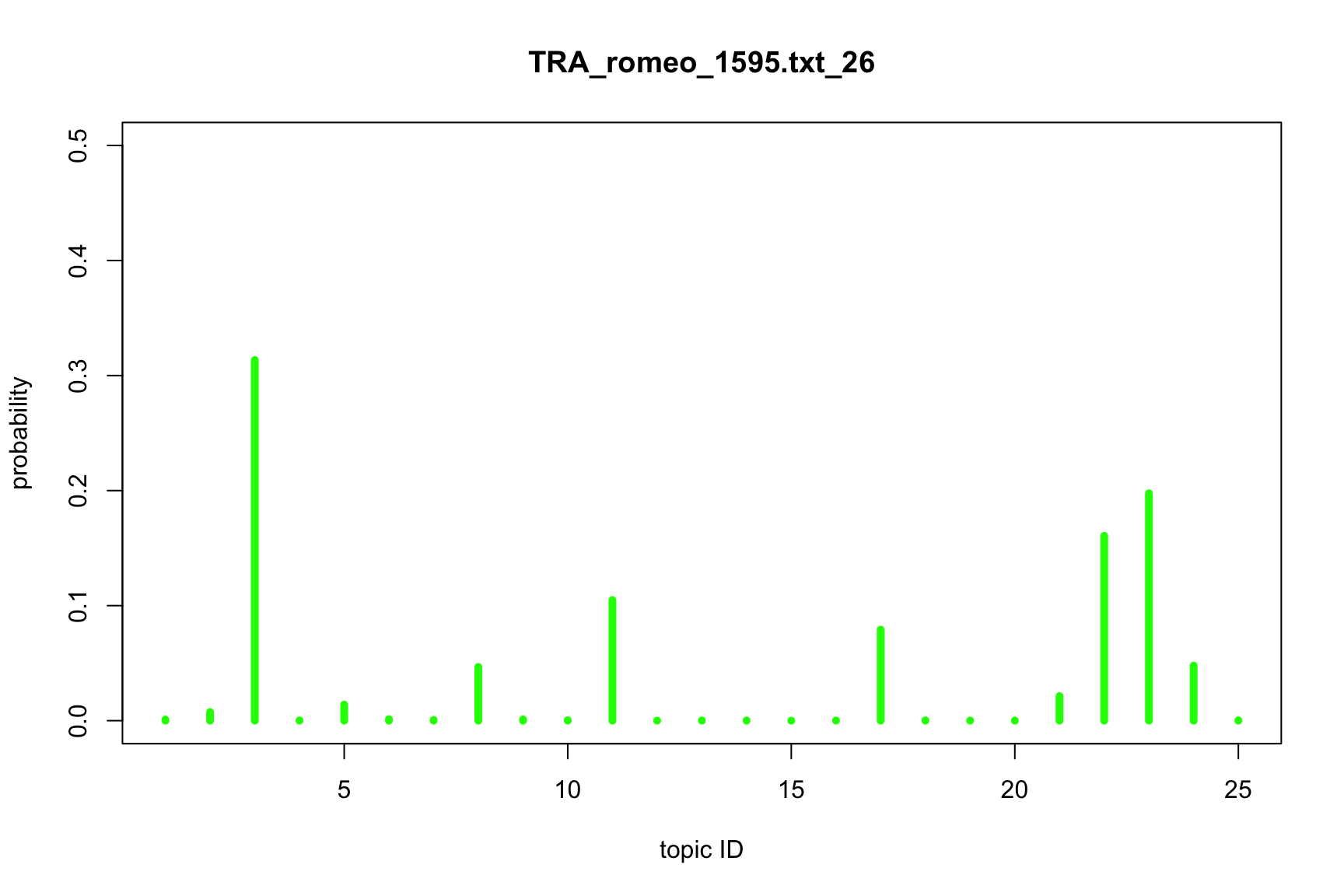

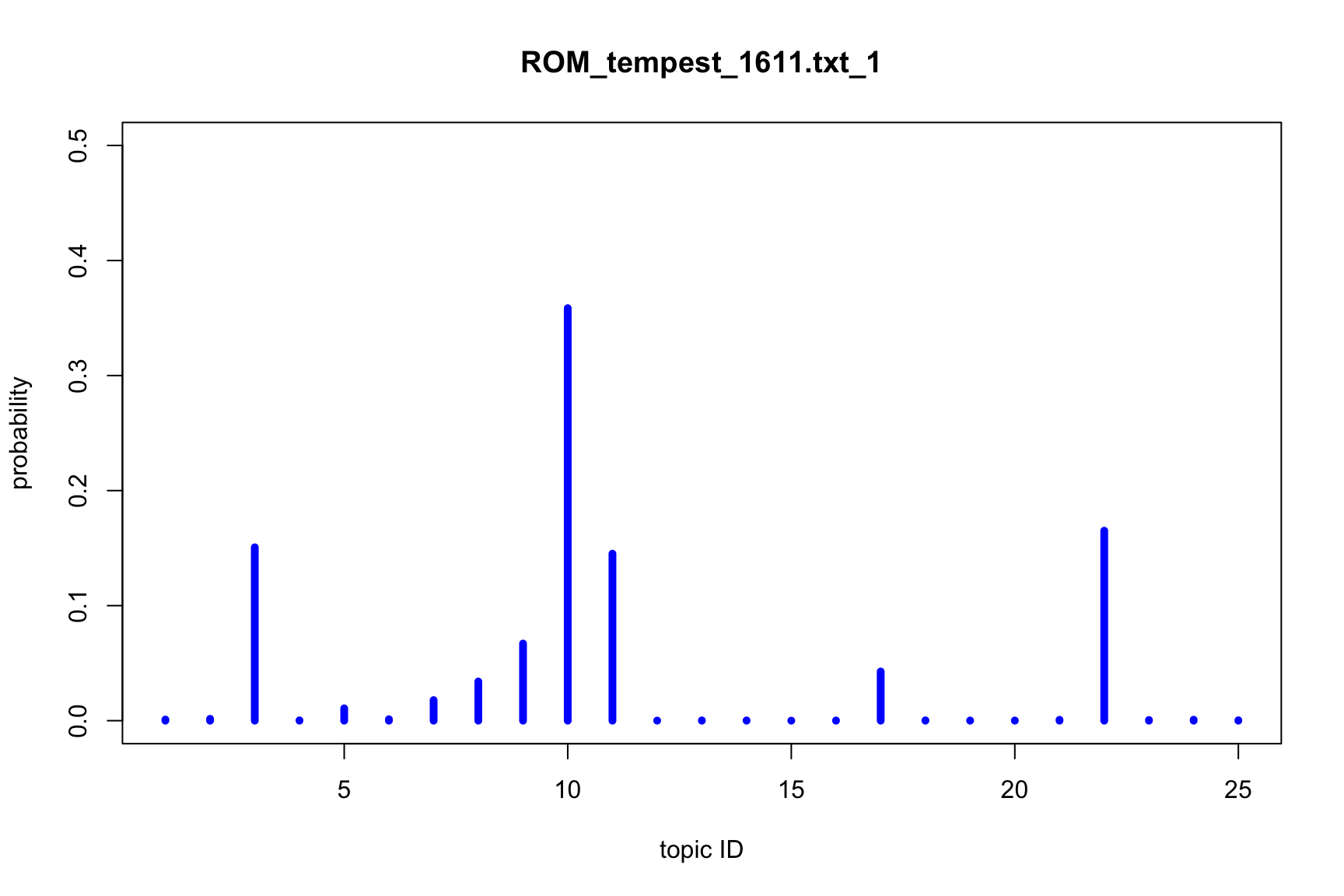

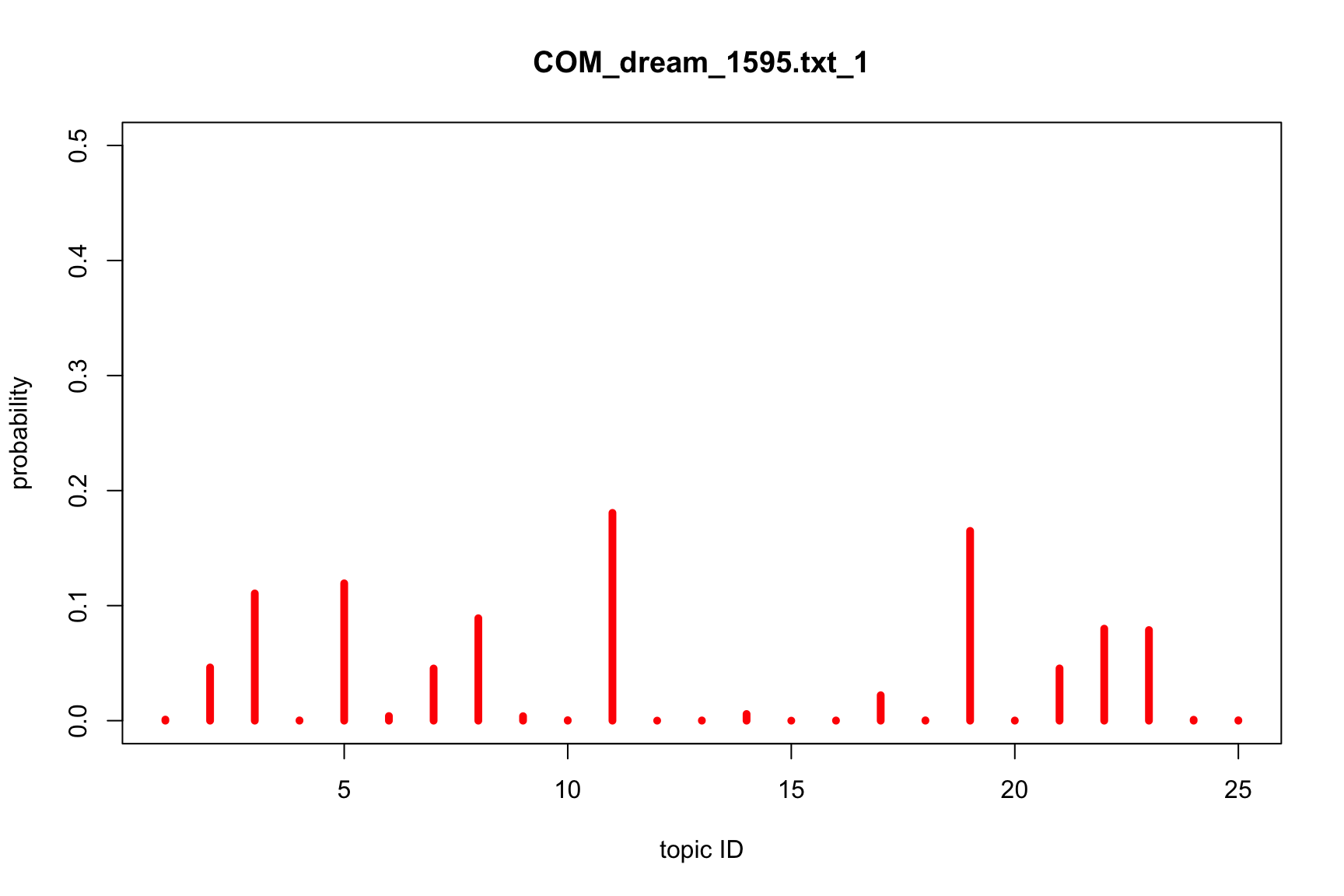

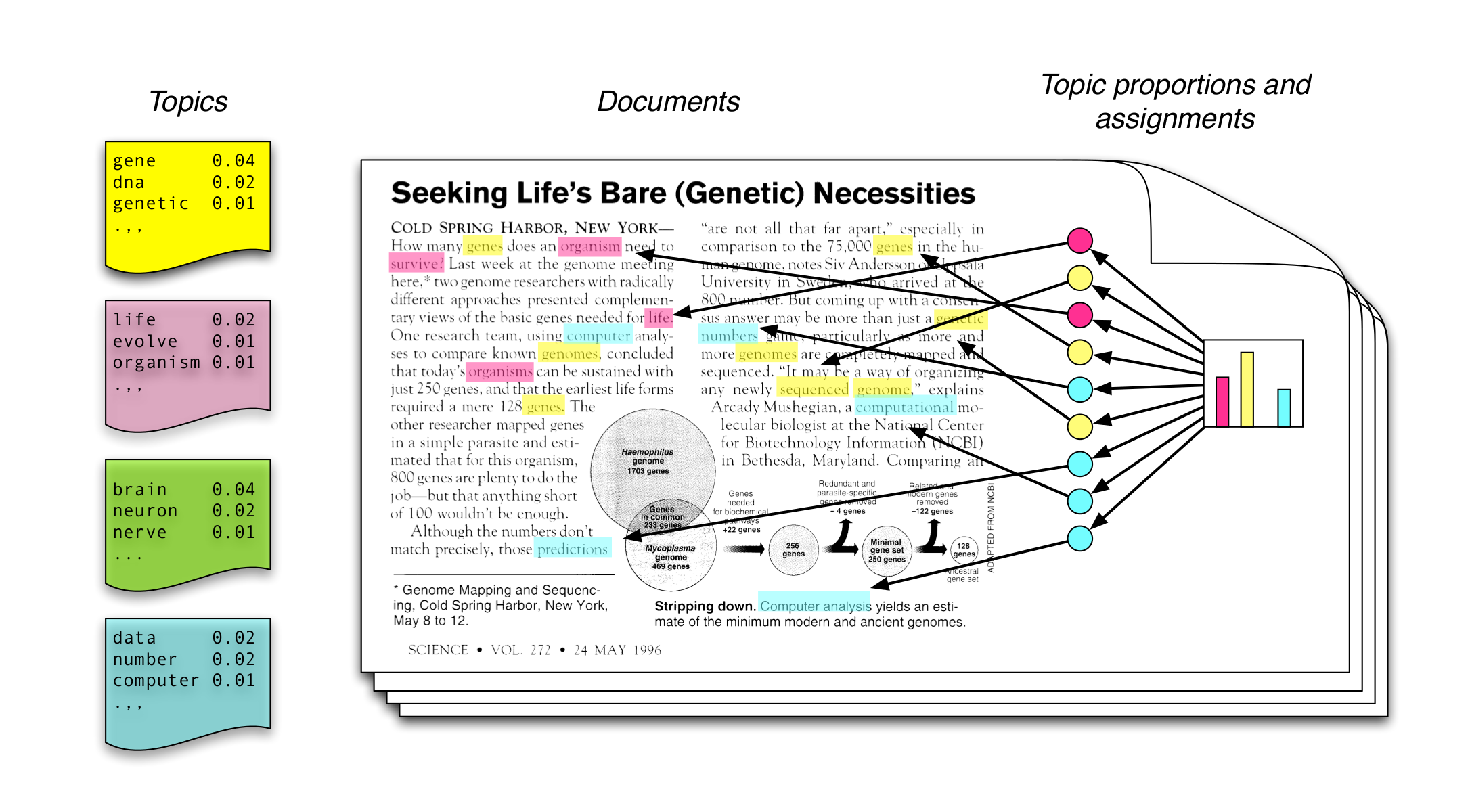

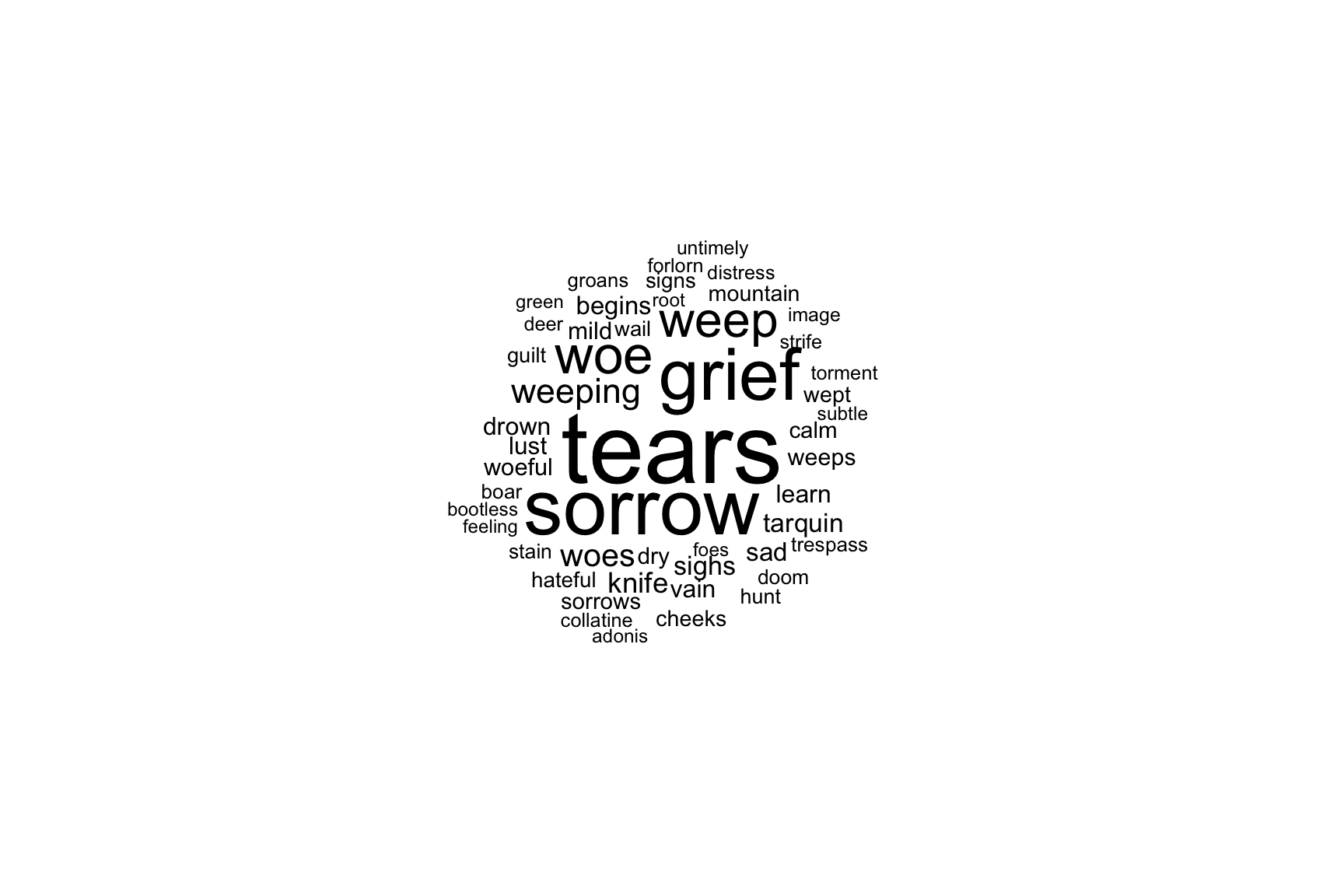

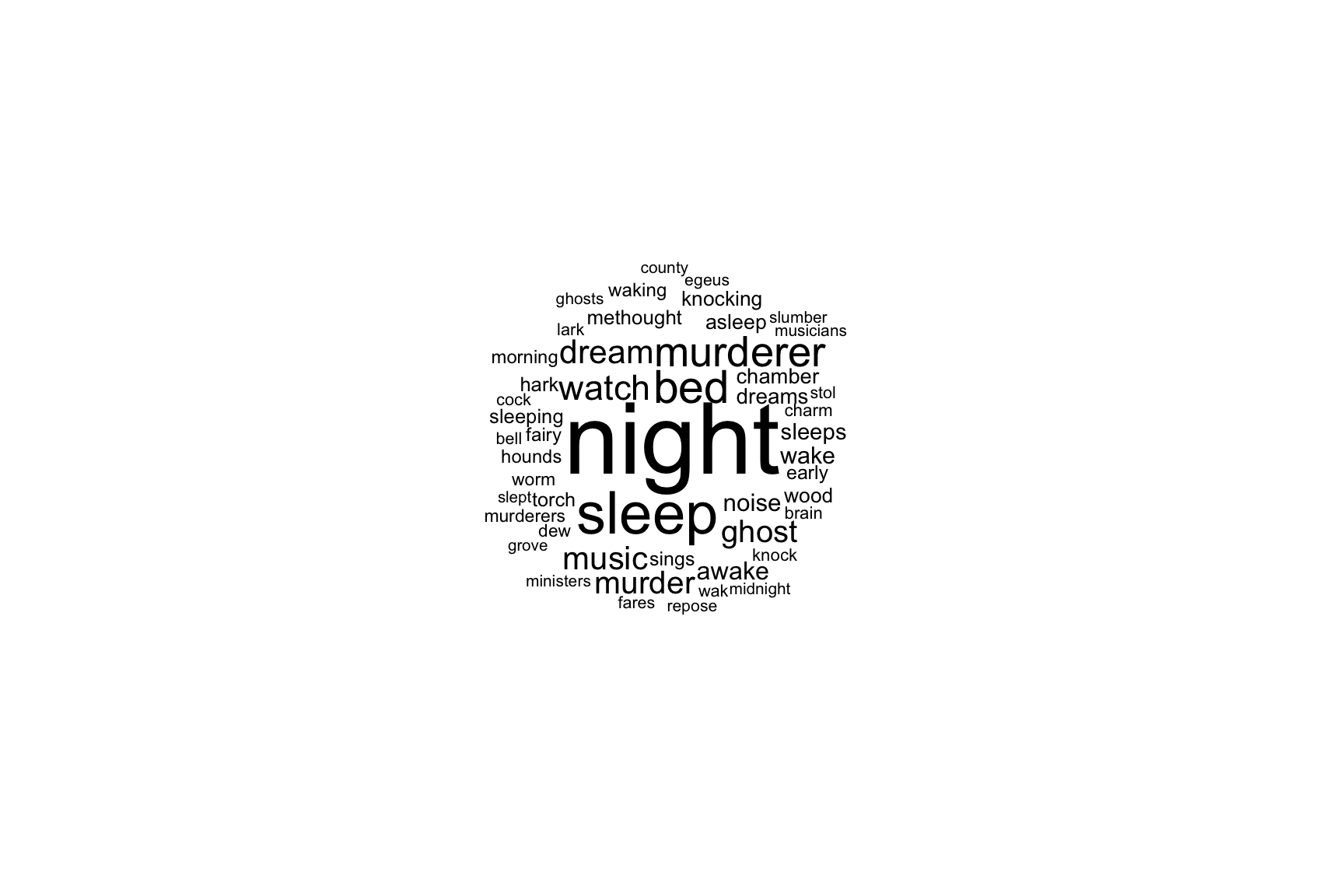

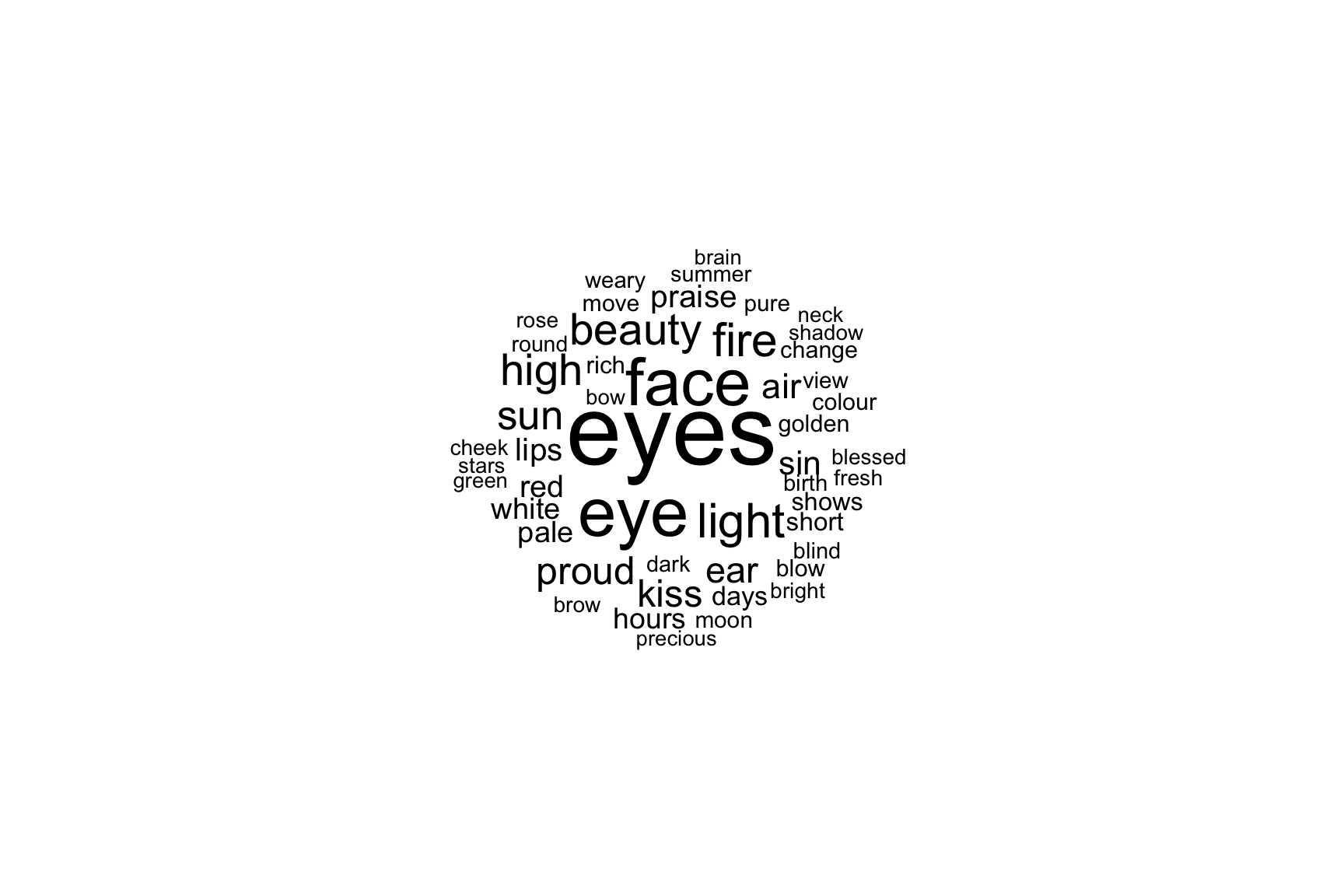

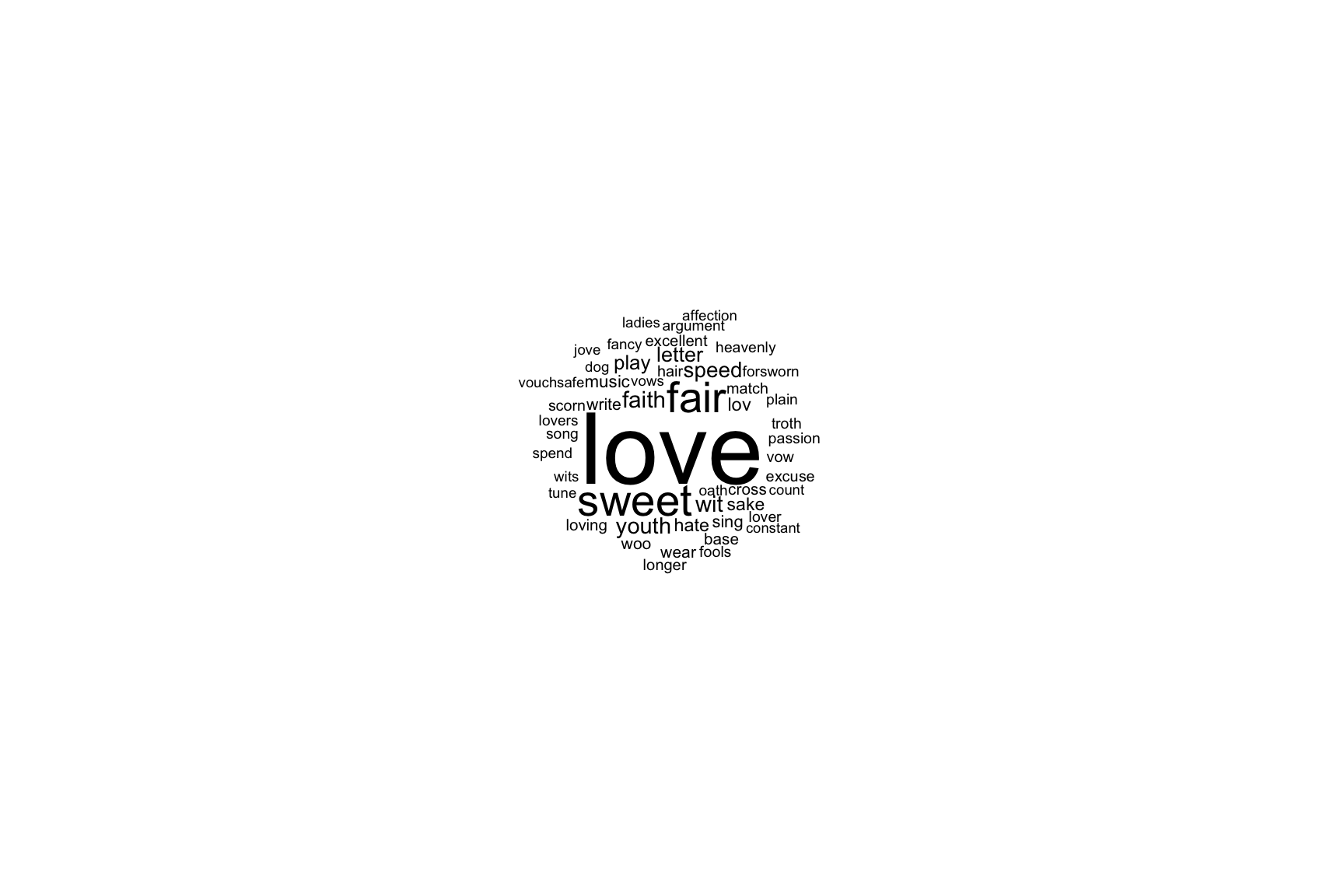

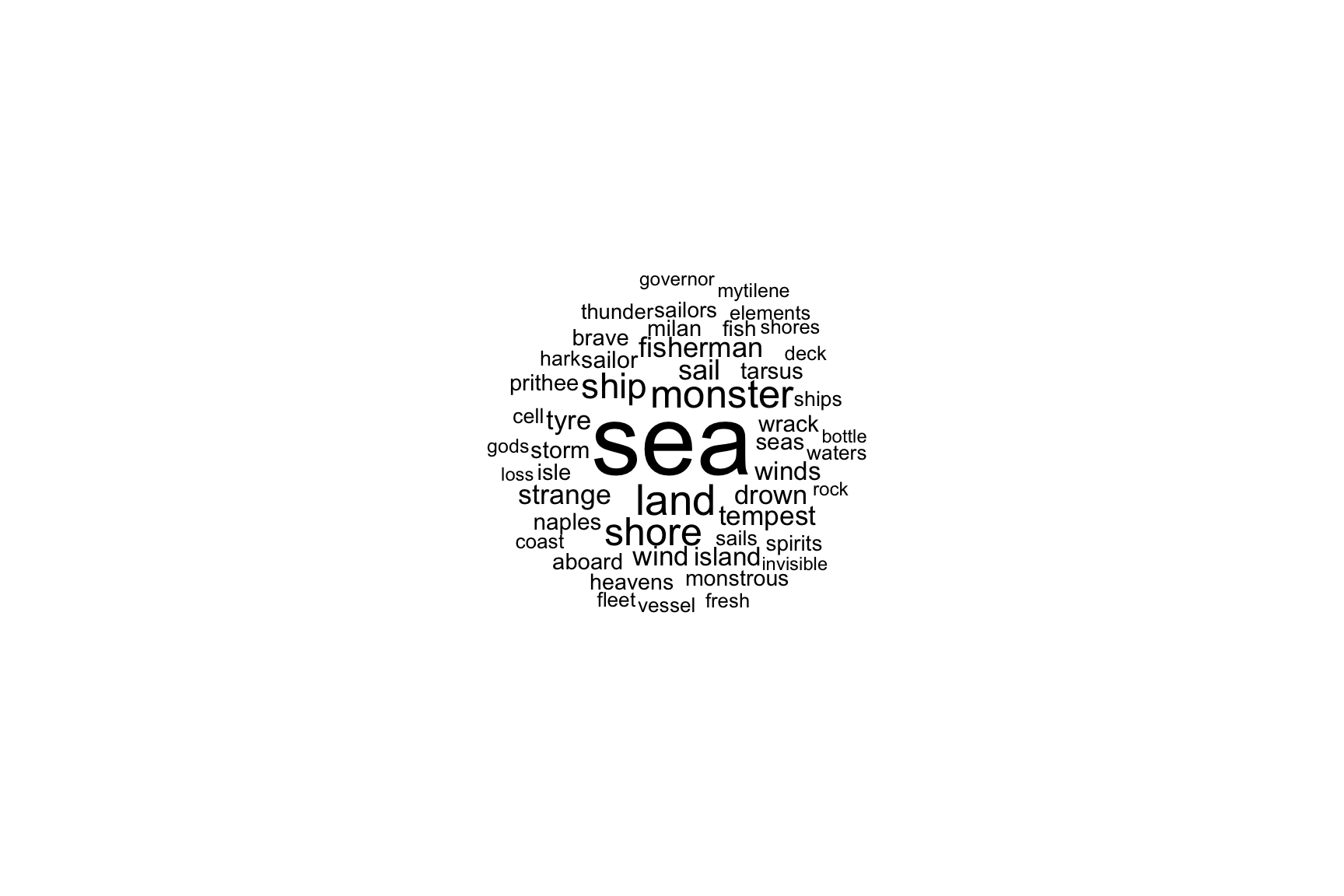

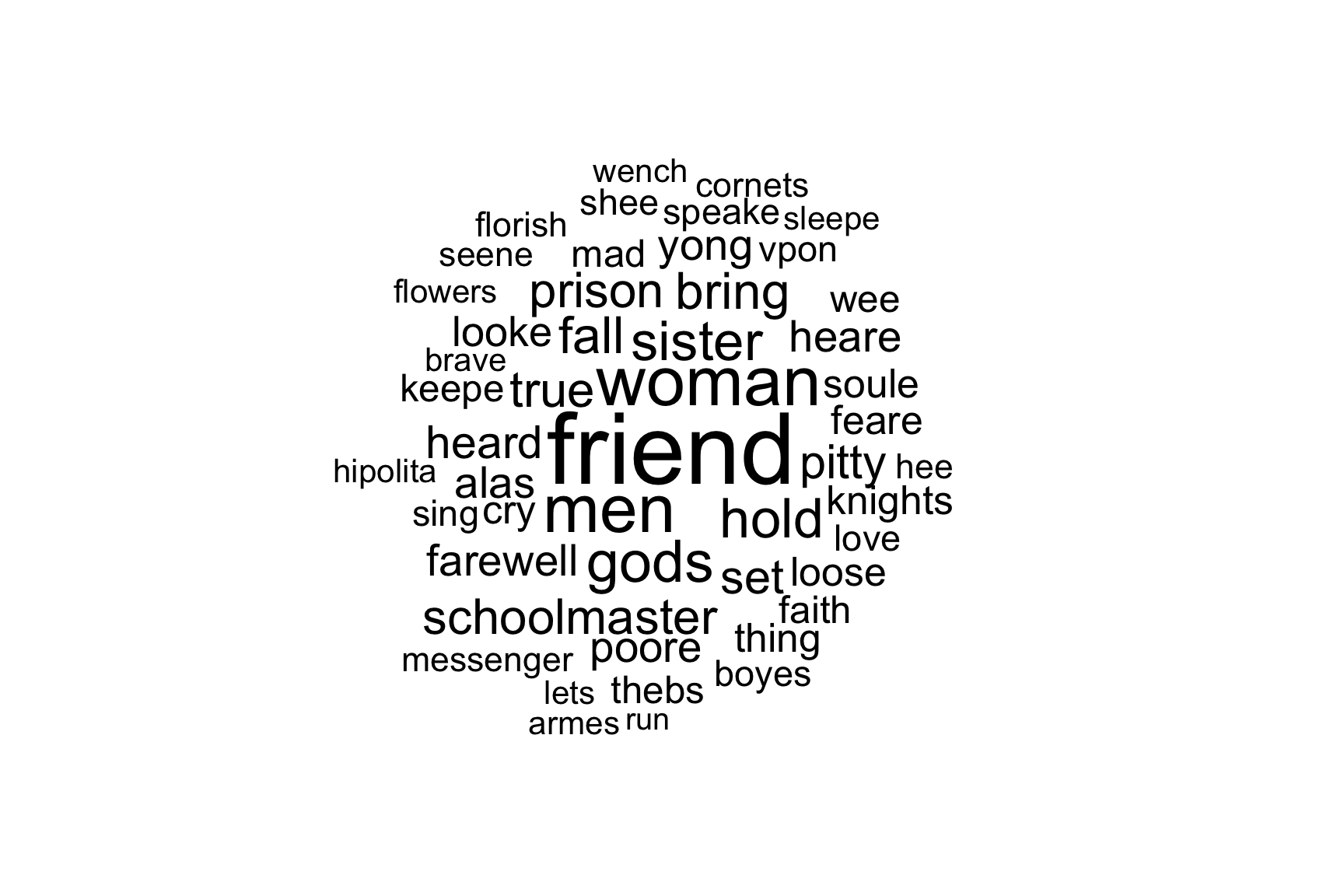

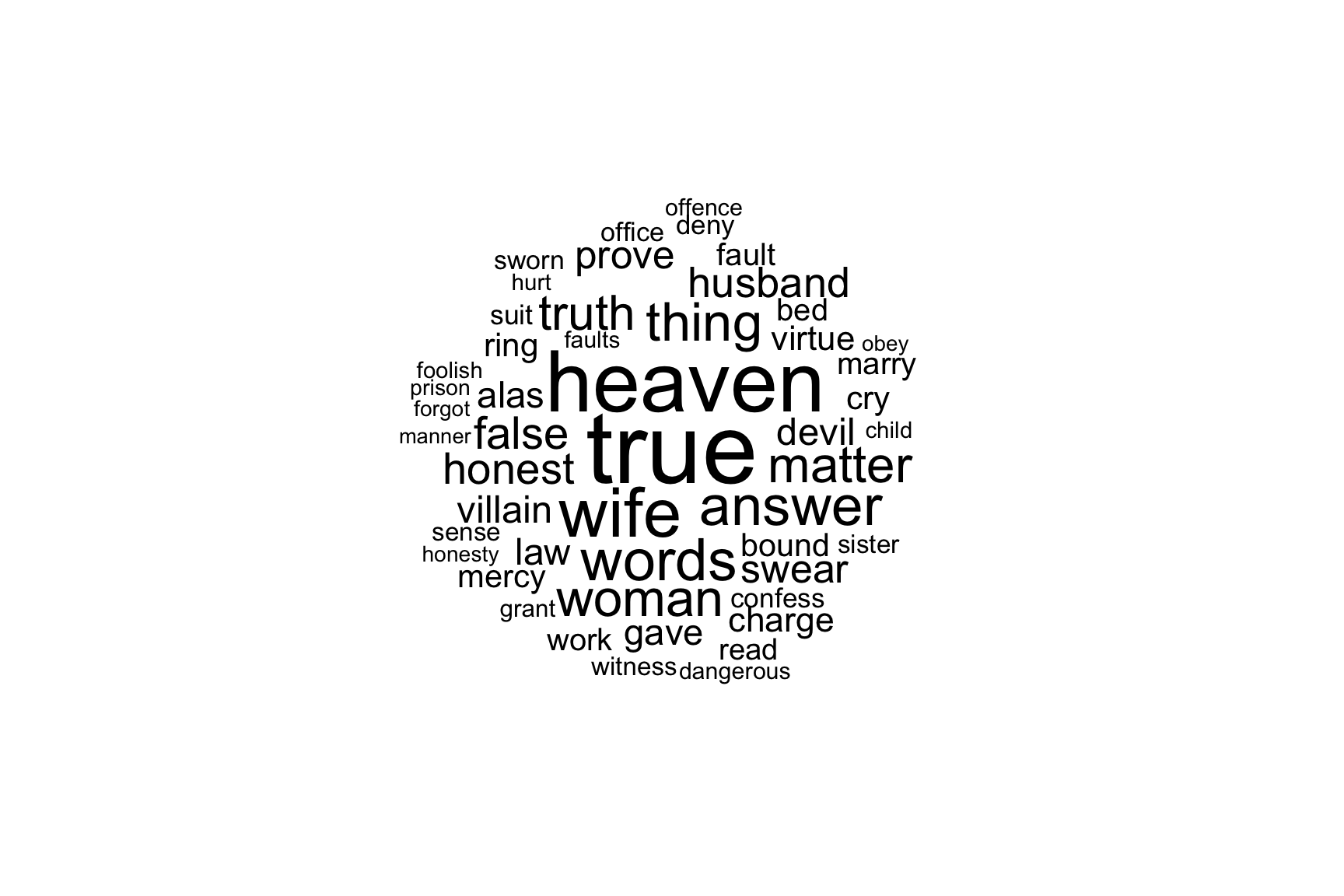

We formally define a topic to be a distribution over a fixed vocabulary. For example, the genetics topic has words about genetics with high probability and the evolutionary biology topic has words about evolutionary biology with high probability.

(Blei 2012, 78)
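To make the definition concrete, a topic can be stored as a list of probabilities aligned with a fixed vocabulary. The sketch below is only an illustration: the six-word vocabulary, the topic names, and all probability values are invented, and `top_words` is a hypothetical helper, not part of any topic modeling library.

```python
import math
import random

# Hypothetical fixed vocabulary shared by all topics.
vocabulary = ["gene", "dna", "species", "evolve", "war", "sword"]

# Each topic assigns a probability to every word in the vocabulary.
# The values are invented for illustration.
topics = {
    "genetics": [0.40, 0.40, 0.08, 0.08, 0.02, 0.02],
    "evolution": [0.08, 0.08, 0.40, 0.40, 0.02, 0.02],
}


def top_words(probabilities, n=2):
    """Return the n vocabulary words with the highest probability."""
    ranked = sorted(zip(vocabulary, probabilities), key=lambda pair: -pair[1])
    return [word for word, _ in ranked[:n]]


# A topic is a proper distribution: its probabilities sum to 1.
for name, probabilities in topics.items():
    assert math.isclose(sum(probabilities), 1.0)
    print(name, top_words(probabilities))

# Drawing words from a topic favours its high-probability words.
rng = random.Random(0)
sample = rng.choices(vocabulary, weights=topics["genetics"], k=10)
print(sample)
```

Every topic covers the whole vocabulary; topics differ only in which words receive high probability, which is why a topic is usually displayed as a list of its top-ranked words.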

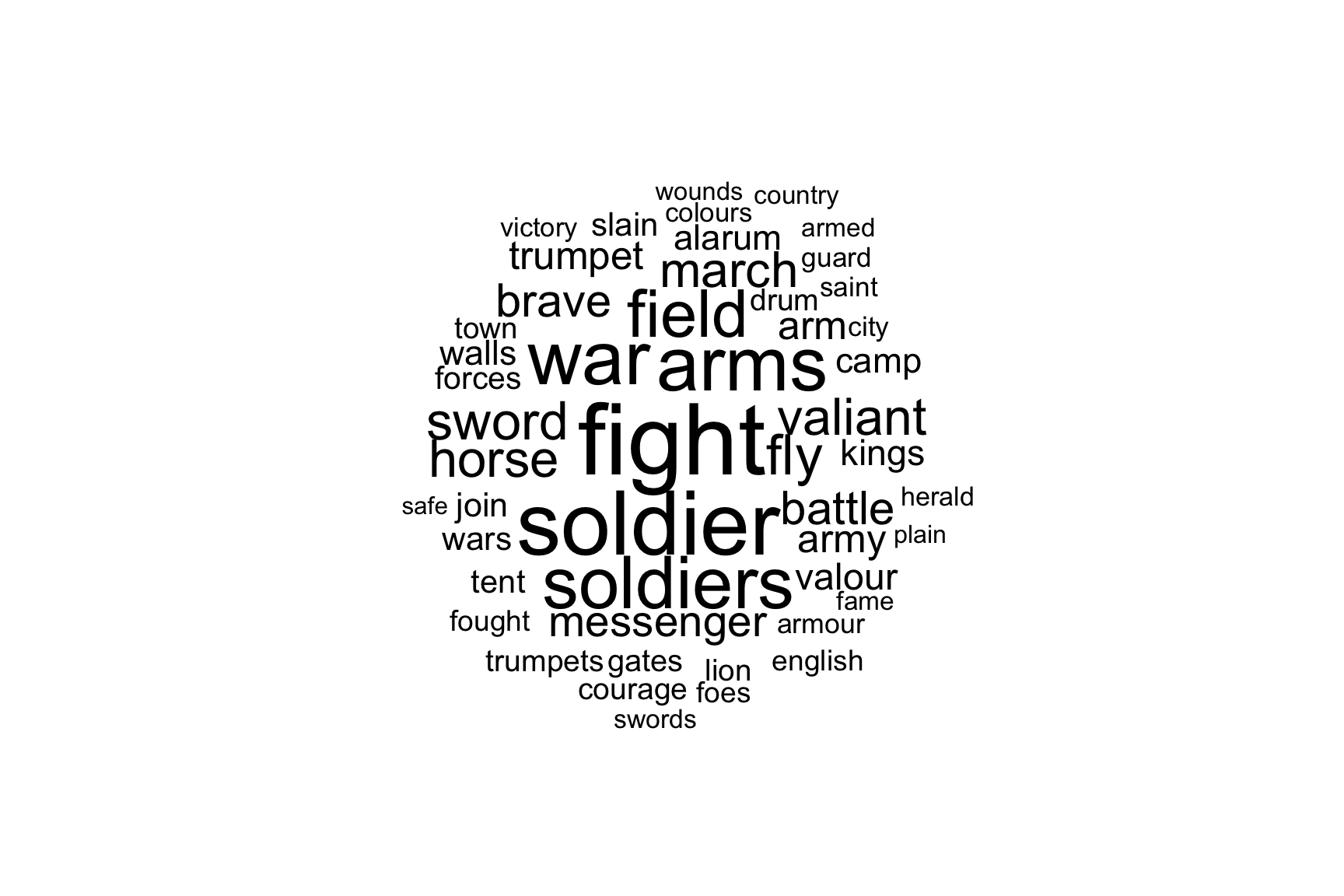

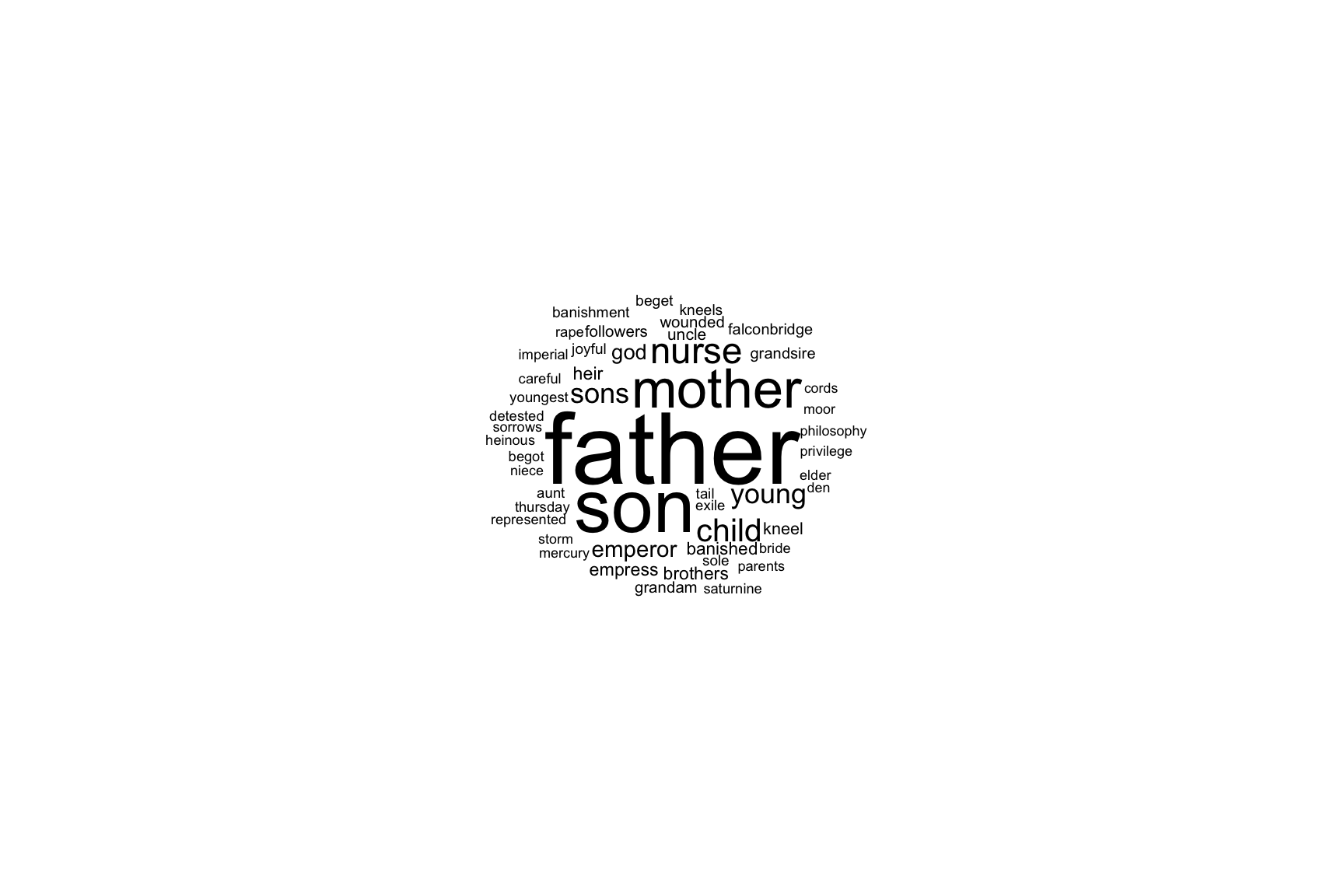

fight soldier arms war soldiers field fly sword horse valiant march battle brave messenger arm army trumpet valour kings camp alarum walls join wars slain tent forces gates drum courage trumpets lion town fought foes english armour city saint guard colours victory herald swords fame armed country wounds plain safe …

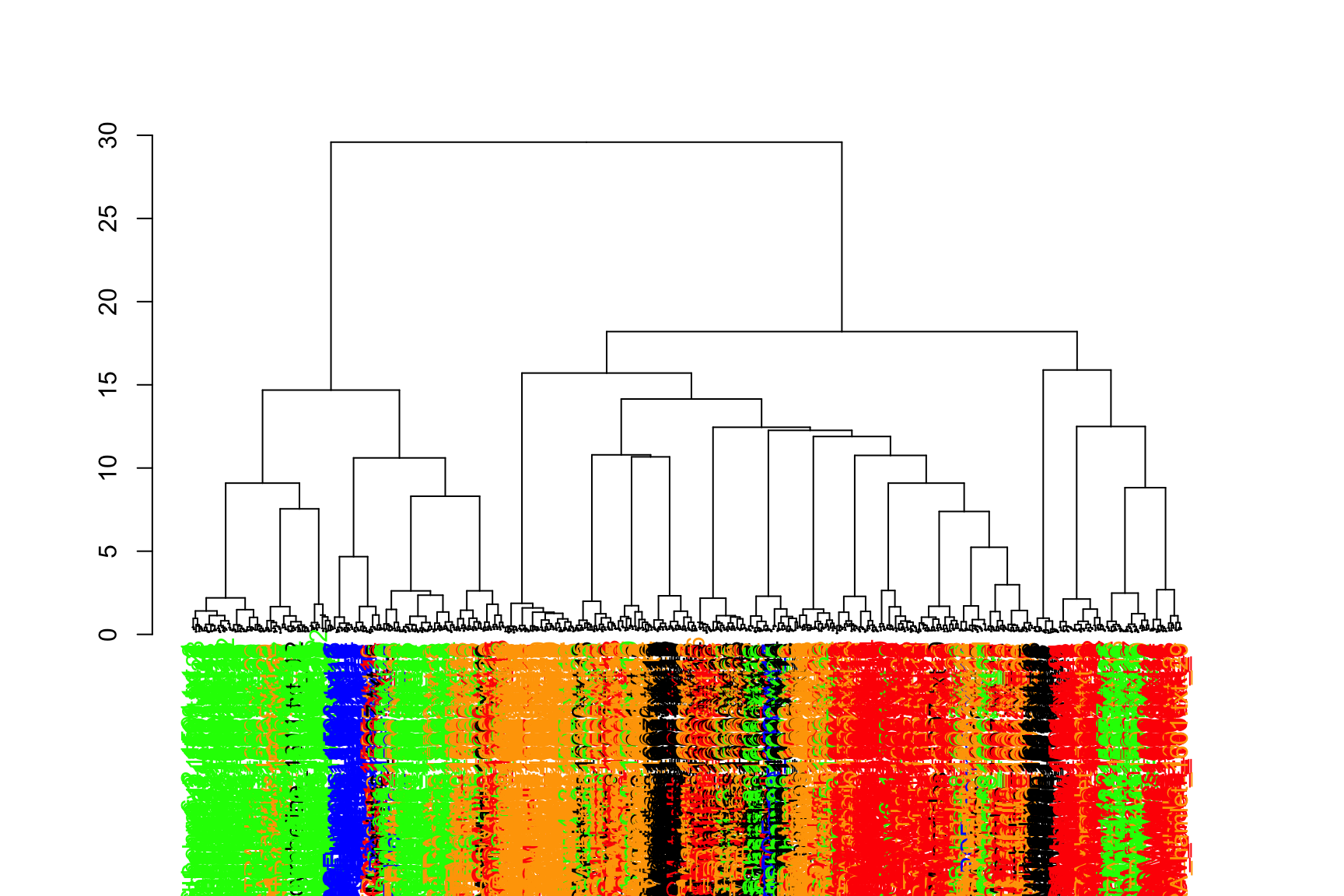

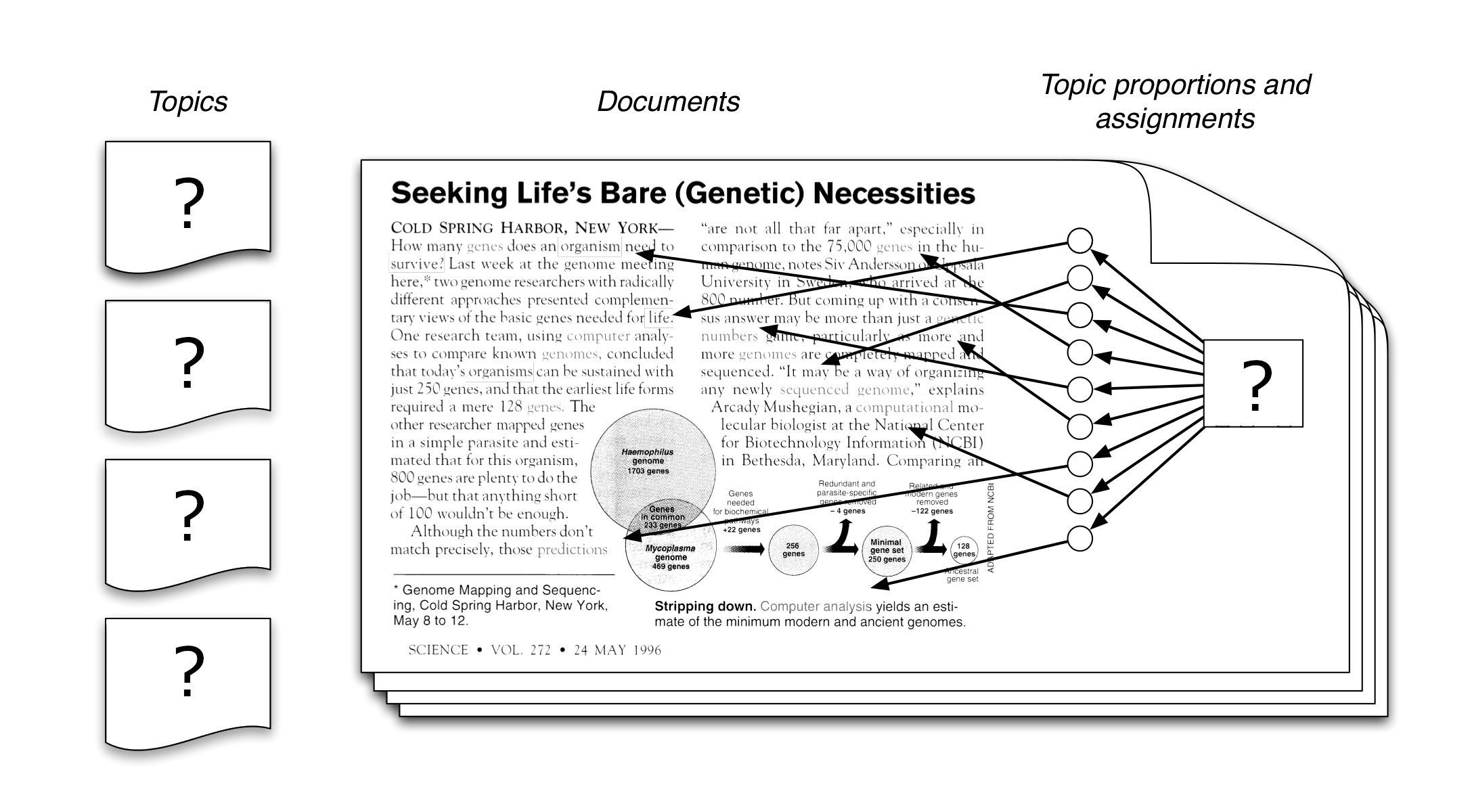

Indeed calling these models “topic models” is retrospective – the topics that emerge from the inference algorithm are interpretable for almost any collection that is analyzed. The fact that these look like topics has to do with the statistical structure of observed language and how it interacts with the specific probabilistic assumptions of LDA.

(Blei 2012, 79)